The Three Software Paradigms: Why Every AI Builder and Investor Must Understand Karpathy's Framework

Navigating Computing's Greatest Transformation Since 1954: From Terminal Windows to Autonomy Sliders

Technology industries are defined by pivotal moments of strategic reinvention. When Andrej Karpathy, former Director of AI at Tesla, recently keynoted the Y Combinator AI Startup School, he articulated a framework that every AI product developer and investor needs to internalize: we are witnessing the most fundamental transformation in software since its inception 70 years ago.

More critically, we are merely in the "1960s" of this new computing era—a sobering reality check for those racing to build or fund the next AI unicorn.

The Strategic Inflection Point: From Code to Weights to Prompts

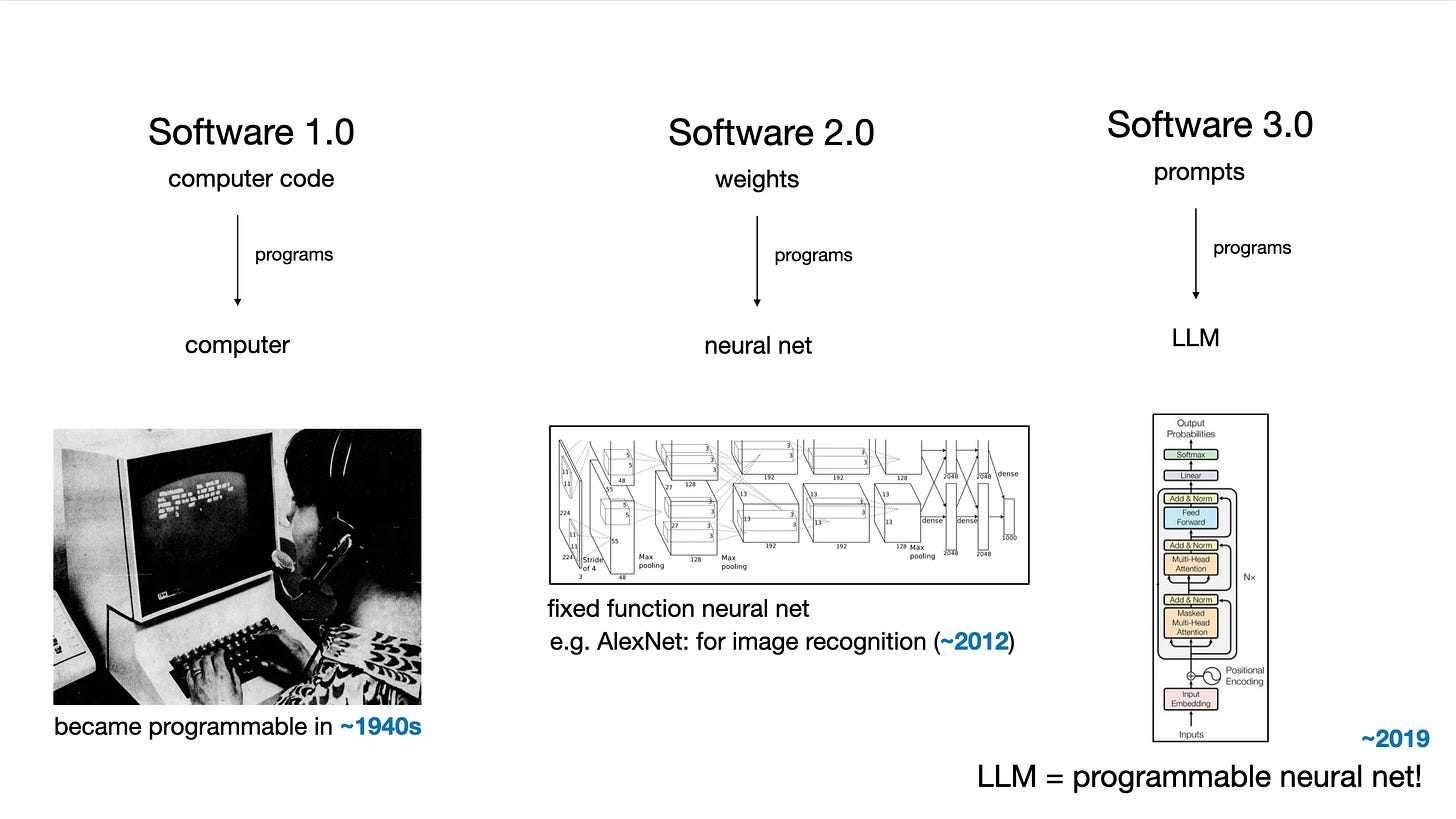

Karpathy's framework elegantly captures three distinct programming paradigms that now coexist in our technological landscape:

Software 1.0: The Classical Era

Traditional programming where humans write explicit instructions in languages like Python or C++. This is the GitHub universe: millions of repositories containing human-crafted logic.

Software 2.0: The Neural Network Revolution

Here, the "code" consists of neural network weights, not written but optimized through data and training. Hugging Face has emerged as the GitHub equivalent for this paradigm. At Tesla, Karpathy witnessed this transformation firsthand as neural networks "ate through" the autopilot's C++ codebase, replacing traditional algorithms with learned representations.

Software 3.0: The Natural Language Disruption

The game-changer: Large Language Models programmable through natural language prompts. For the first time in computing history, English has become a programming language. This isn't merely an incremental improvement; it's a fundamental reimagining of human-computer interaction.
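To make the three paradigms concrete, here is a minimal sketch of one task, sentiment classification, expressed in each of them. The word lists, function names, and prompt wording are illustrative assumptions, not taken from the talk, and the Software 3.0 version only builds the prompt rather than calling any particular LLM API.

```python
from collections import Counter

# Software 1.0: a human writes the decision rule explicitly.
POSITIVE = {"great", "love", "excellent"}   # hypothetical word lists
NEGATIVE = {"terrible", "hate", "awful"}

def classify_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score >= 0 else "negative"

# Software 2.0: the "program" is a set of weights fitted to labeled data.
# A tiny perceptron stands in for a neural network here.
def train_v2(examples: list[tuple[str, int]], epochs: int = 10) -> Counter:
    weights: Counter = Counter()
    for _ in range(epochs):
        for text, label in examples:  # label is +1 or -1
            words = text.lower().split()
            prediction = 1 if sum(weights[w] for w in words) >= 0 else -1
            if prediction != label:  # update weights only on mistakes
                for w in words:
                    weights[w] += label
    return weights

def classify_v2(weights: Counter, text: str) -> str:
    score = sum(weights[w] for w in text.lower().split())
    return "positive" if score >= 0 else "negative"

# Software 3.0: the "program" is a natural-language prompt for an LLM.
def build_prompt_v3(text: str) -> str:
    return (
        "Classify the sentiment of the following review as 'positive' or "
        f"'negative'. Reply with one word.\n\nReview: {text}"
    )
```

The point of the comparison is where the logic lives: in hand-written rules, in learned weights, or in an English instruction. A real product would likely mix all three.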

Strategic Implications for Product Development

The emergence of Software 3.0 creates unprecedented opportunities and challenges:

• Democratization of Development: "Vibe coding" enables non-programmers to build functional applications

• Paradigm Fluency: Successful products will seamlessly blend all three paradigms

• Infrastructure Transformation: Existing digital infrastructure requires fundamental adaptation

Understanding LLMs: Beyond the Utility Metaphor

Many investors and builders make the critical error of viewing LLMs as simple utilities—commoditized services differentiated only by price and performance. Karpathy's analysis reveals a more nuanced reality.

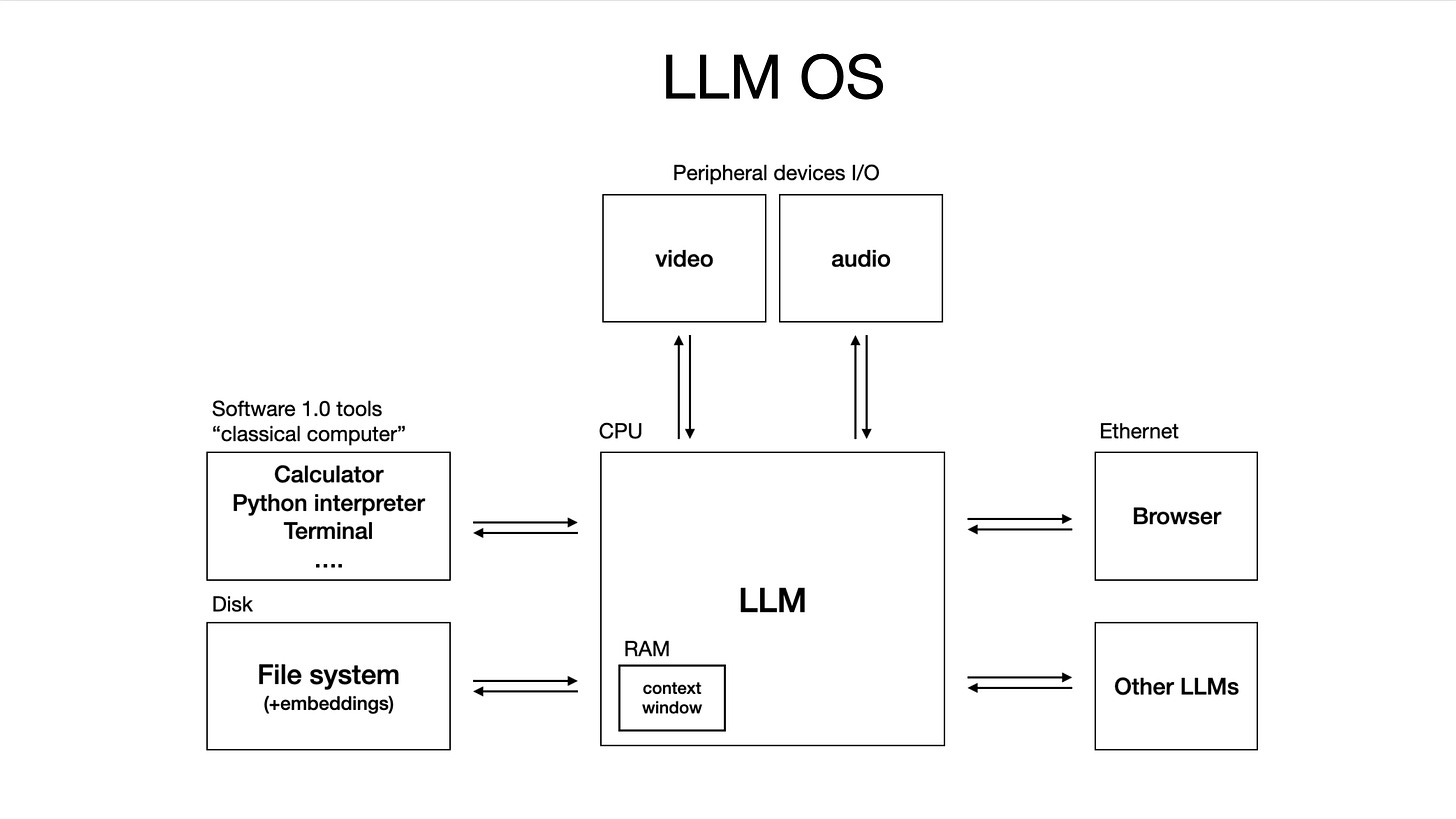

The Operating System Paradigm

LLMs are evolving into complex software ecosystems that mirror the structure of operating systems:

Architectural Parallels:

LLM core = CPU equivalent

Context windows = Working memory

Tool integration = System calls and APIs

Multi-modal capabilities = Device drivers
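The parallels above can be sketched in a few lines. This is an illustration of the analogy only, under the assumption that a context window behaves like bounded working memory and that tool use behaves like a dispatch table of system calls; the class, tool names, and eviction policy are hypothetical and do not describe how any specific LLM product works.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ContextWindow:
    """Bounded working memory: oldest entries are evicted when full."""
    max_items: int
    items: list[str] = field(default_factory=list)

    def append(self, entry: str) -> None:
        self.items.append(entry)
        # Drop the oldest entries once capacity is exceeded.
        del self.items[: max(0, len(self.items) - self.max_items)]

# The "system call table": named tools the model may request (hypothetical).
TOOLS: dict[str, Callable[[str], str]] = {
    "upper": lambda arg: arg.upper(),
    "length": lambda arg: str(len(arg)),
}

def syscall(context: ContextWindow, name: str, arg: str) -> str:
    """Dispatch a tool request and record the result in working memory."""
    if name not in TOOLS:
        result = f"error: unknown tool {name!r}"
    else:
        result = TOOLS[name](arg)
    context.append(f"{name}({arg!r}) -> {result}")
    return result
```

As in an operating system, the "CPU" never touches the outside world directly: it asks for a named capability, and the result comes back into limited memory.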

Market Structure Implications:

Consolidation around few major providers (OpenAI, Anthropic, Google)

Open-source alternatives emerging (Llama ecosystem as the "Linux" of LLMs)

Application layer opportunities expanding rapidly

This framing has profound implications for investment strategy. Just as the OS wars of the 1980s-90s created massive value in the application layer, we're entering a similar phase with LLM platforms.

The Partial Autonomy Imperative: Building Products That Actually Work

Perhaps Karpathy's most actionable insight concerns product design philosophy. Drawing from his five years building Tesla's autopilot, he articulates why "partial autonomy" represents the optimal approach for current AI products.

Case Study: The Anatomy of Successful LLM Applications

Examining leaders like Cursor (coding) and Perplexity (search) reveals consistent design patterns that separate winners from also-rans in the AI application space.

The Email Management Paradox: A Tale of Two Approaches

Consider the contrasting philosophies of two AI email assistants: Jace and Cora. Their divergent approaches illuminate why Karpathy's principles matter for product success.

Jace: The Cursor of Email

Jace explicitly models itself after Cursor's successful design patterns:

Three-pane interface: Email categorization (left), content and editable draft (center), chat-based instructions (right)

Real-time verification loop: Users see and modify AI-generated responses before sending

Multi-tool orchestration: Integrates calendar analysis, web search, and file processing

Contextual learning: Adapts to individual communication styles while maintaining human oversight

Users report saving hours per week on email with minimal editing required—the hallmark of effective human-AI collaboration.

Cora: The Scheduled Summary Approach

Cora takes a fundamentally different path:

Passive consumption model: Delivers twice-daily email summaries

No real-time interaction: Users receive briefings at 8 AM and 3 PM

Limited verification opportunities: Drafted responses exist but lack immediate editing interface

Philosophy-driven: Forces users into a specific email methodology

User feedback suggests which approach resonates. While Cora frames itself as "not an 'AI email assistant'" but rather an "email methodology," Jace users describe it as transformative for actual productivity. The difference is that Jace enables the rapid generation-verification loop that Karpathy identifies as critical.

Universal Success Patterns

1. Context Management Sophistication

The application handles complex context automatically, abstracting away the complexity from users while maintaining transparency.

2. Multi-Model Orchestration

Successful apps don't rely on a single LLM but orchestrate multiple models for specialized tasks: embeddings for search, generation for drafting, analysis for categorization.

3. Purpose-Built Interfaces

Generic chat interfaces are insufficient. Cursor's diff visualization, Perplexity's source citations, and Jace's three-pane layout exemplify how domain-specific UIs enable rapid human verification.

4. The Autonomy Slider

Users control the level of AI involvement, from minor assistance to full automation. This isn't a compromise; it's a feature that acknowledges the current reality of AI capabilities while building toward greater autonomy.
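One way to picture the autonomy slider is as a single setting that decides when a human must approve an action. The sketch below assumes four hypothetical levels and a simple "low"/"high" risk label; the names and thresholds are illustrative choices, not something Karpathy or any of the products above specify.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    SUGGEST = 1        # AI proposes, human does everything
    DRAFT = 2          # AI writes a draft, human approves each one
    AUTO_LOW_RISK = 3  # AI acts alone on low-risk items only
    FULL = 4           # AI acts alone on everything

def needs_human_approval(level: Autonomy, risk: str) -> bool:
    """Return True when a human must approve before the action runs."""
    if level >= Autonomy.FULL:
        return False
    if level == Autonomy.AUTO_LOW_RISK:
        return risk != "low"
    return True  # SUGGEST and DRAFT always keep the human in the loop
```

Because the levels are ordered, a product could ship with the slider set low and let users raise it as trust develops, without changing the surrounding workflow.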

The Verification Bottleneck

Karpathy's key insight: the human verification loop is the critical constraint in AI-assisted workflows. Products must optimize for:

Rapid visual assessment (leveraging human "GPU" for pattern recognition)

Incremental, auditable changes (avoiding overwhelming diffs)

Failure-tolerant workflows (assuming AI errors as default)

The Jace-Cora dichotomy illustrates this perfectly. Cora's twice-daily briefings break the verification loop, forcing users to context-switch hours after emails arrive. Jace, by contrast, enables immediate verification and editing—maintaining the flow state that defines productive work.

This isn't merely a UX preference; it's a fundamental requirement for human-AI collaboration. As Karpathy observed from Tesla's autopilot development, the path to full autonomy requires thousands of iterations of the human-AI loop. Products that optimize this loop will compound their advantages over time.
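The "incremental, auditable changes" requirement can be illustrated with Python's standard difflib module. This is a minimal sketch, assuming the AI proposes a full rewritten text and the product wants to present it as small hunks a person can accept or reject one at a time; the function name and the choice of one line of context are assumptions made for the example.

```python
import difflib

def review_hunks(before: str, after: str, context: int = 1) -> list[str]:
    """Split an AI-proposed edit into small hunks for human review."""
    diff = difflib.unified_diff(
        before.splitlines(), after.splitlines(), lineterm="", n=context
    )
    hunks: list[str] = []
    for line in diff:
        if line.startswith("@@"):
            hunks.append(line)        # a hunk header starts a new review unit
        elif hunks:
            hunks[-1] += "\n" + line  # body lines join the current hunk
        # lines before the first "@@" are file headers and are skipped
    return hunks
```

Showing each hunk separately keeps every decision small, which is the property that makes a diff view faster to verify than a block of regenerated text.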

The Infrastructure Revolution: Building for a Multi-Agent Future

Forward-thinking organizations must recognize that agents represent a new class of digital infrastructure consumers, distinct from both humans (who use GUIs) and traditional computers (which use APIs).

Strategic Infrastructure Adaptations

Documentation Revolution:

Markdown-first documentation (LLM-readable)

Replacement of "click here" instructions with executable commands

Early movers like Vercel and Stripe gaining competitive advantage

New Protocols and Standards:

llms.txt files for domain-specific LLM instructions

Anthropic's Model Context Protocol for connecting agents to tools and data sources

Ingestion tools that transform human-readable content to LLM-optimized formats

The Competitive Imperative: Organizations that fail to adapt their digital infrastructure for agent consumption will find themselves at a severe disadvantage as AI-assisted workflows become standard.
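As a rough illustration of what agent-readable documentation can look like, the sketch below renders a small Markdown index in the general shape described by the llms.txt proposal: a title, a short summary, and sections of annotated links. The function name, the example site, and its URLs are hypothetical, and the exact layout should be checked against the proposal itself before relying on it.

```python
def render_llms_txt(
    title: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Render a Markdown index: H1 title, quoted summary, linked sections."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"

# Hypothetical usage with placeholder URLs.
example = render_llms_txt(
    "Example API",
    "Docs for a hypothetical payments API.",
    {"Docs": [("Quickstart", "https://example.com/docs/quickstart.md",
               "Install and first request")]},
)
```

The design choice worth noting is that the output is plain Markdown with explicit links, so an agent can follow it without rendering a page or clicking through a GUI.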

Investment Thesis: The Decade of Agents

Karpathy's framework suggests several key investment principles:

1. Realistic Timeline Expectations

The "2025 is the year of agents" narrative is dangerously optimistic. Karpathy's experience with self-driving—a perfect demo in 2013, still being perfected in 2025—offers a sobering parallel. Plan for a decade-long transformation, not a two-year disruption.

2. Augmentation Over Automation

The highest-value opportunities lie in "Iron Man suits" (augmentation tools) rather than "Iron Man robots" (full automation). Products that enhance human capabilities while maintaining human oversight will dominate the next five years.

3. Infrastructure Plays

Just as the internet boom created value in infrastructure companies, the AI transformation will reward those building the scaffolding for agent-based computing.

4. Cross-Paradigm Competence

Winners will fluidly navigate between Software 1.0, 2.0, and 3.0, choosing the right tool for each component. Pure-play AI companies may struggle against those with hybrid approaches.

The Path Forward: Strategic Imperatives

For product builders and investors navigating this transformation, several principles emerge:

Build for the Verification Loop

Every product decision should optimize the speed and accuracy of human verification. This isn't a temporary constraint; it's a fundamental design principle for the next decade.

Embrace Partial Autonomy

Products should ship with an "autonomy slider," allowing users to dial up AI involvement as the technology matures and trust develops.

Invest in Infrastructure Adaptation

Whether building or investing, prioritize companies that understand agents as first-class digital citizens requiring purpose-built infrastructure.

Maintain Historical Perspective

We're witnessing the birth of a new computing paradigm. Like the transition from mainframes to PCs, the winners won't be determined by who moves first, but by who best understands the fundamental shifts underway.

Conclusion: The 1960s of a New Era

Karpathy's framework reveals that we stand at an extraordinary inflection point. For the first time in 70 years, the fundamental nature of software is changing. We have three distinct programming paradigms, a new class of digital infrastructure consumers, and the democratization of software development through natural language.

The contrast between Jace and Cora exemplifies why understanding these principles matters. Jace, with its Cursor-inspired interface and tight verification loops, has users reporting hours saved weekly. Cora, despite its elegant concept of email-as-narrative, struggles against the fundamental constraint of broken verification loops. The market's verdict is clear: AI products succeed when they enhance human agency, not when they abstract it away.

Yet we must temper our enthusiasm with realism. We are in the 1960s of this new computing era—a time of immense possibility but also fundamental limitations. The organizations that thrive will be those that understand both the transformative potential and the current constraints of these technologies.

For investors, the implications are stark: evaluate AI companies not by their ambitions but by their understanding of human-AI collaboration. Look for products with clear autonomy sliders, purpose-built interfaces for verification, and infrastructure designed for agent interaction. Avoid those chasing full automation without establishing the foundational loops of augmentation.

For builders, the message is equally clear: your north star should be the speed and accuracy of the human verification loop. Every design decision should ask: does this make it easier for humans to verify and guide AI output? Does our infrastructure speak to both human and agent consumers? Are we building Iron Man suits or chasing Iron Man robots?

The future belongs not to those who chase fully autonomous agents, but to those who thoughtfully build the bridges between human intelligence and artificial capability. In this new landscape, understanding Karpathy's framework isn't just helpful—it's essential for anyone serious about building or investing in the AI-powered future.

The question isn't whether this transformation will reshape every industry—it's whether you'll be among those who understand it deeply enough to capture the value it creates.